The evolution of virtualization and cloud platforms, combined with mature adoption of agile methodologies and DevOps, has changed the way organizations build and ship software. The traditional waterfall-style approach, which ended with developers throwing code “over the wall” to the operations team, has been replaced with automated testing, continuous integration, and continuous deployment. What was once considered possible only for “unicorns” (see http://bit.ly/unicornwat) is now within reach of any development shop willing to leverage open source tools. One powerful technology that transformed development, and is now changing the way large companies approach production software, is Docker, or more generically, “containers.”

A Question of Layers

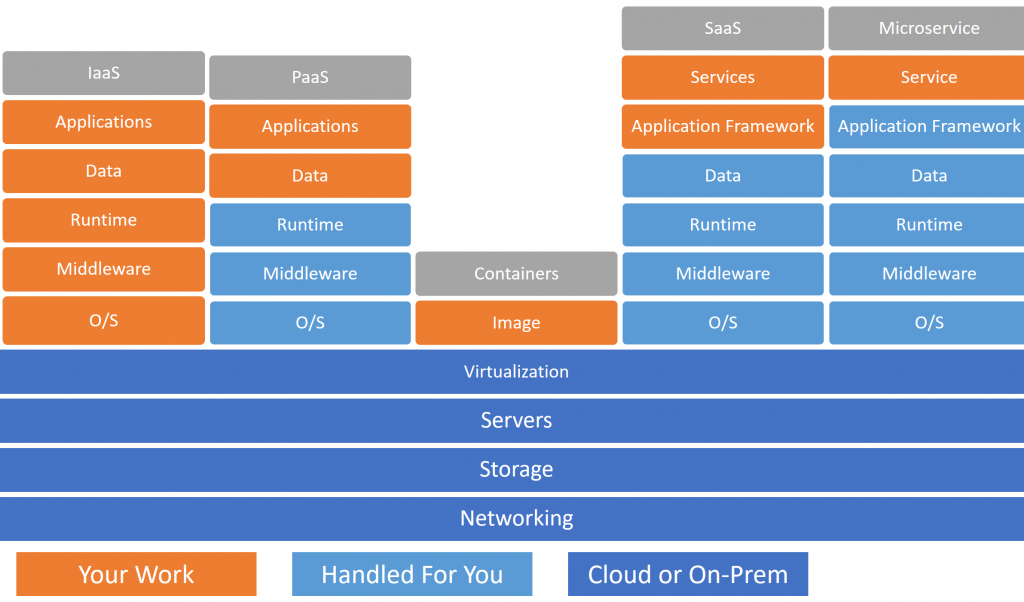

To better understand containers and where they fit, it helps to have a basic knowledge of the various “as-a-Service” (-aaS) layers that exist today. Although the list continues to grow, three service layers have fundamentally transformed cloud technology.

Infrastructure-as-a-Service (IaaS)

IaaS is probably the best-known service layer because it is the easiest to implement (although it also tends to be the most expensive). It refers to managing infrastructure as a service rather than relying on physical hardware within the organization; virtual networks and virtual machines are both examples of IaaS components. Through on-premises hypervisors like VMware and cloud providers like Amazon Web Services (AWS) and Microsoft Azure, technology professionals can create resources like web and application servers “on the fly.” IaaS removes the headache of acquiring, configuring, and deploying physical resources by turning a capital expenditure into an operational expenditure.

The downside of IaaS is that the owner of a virtual machine is still responsible for configuring the operating system, installing software dependencies, and patching security flaws. The effort of managing the environment and the virtual machine is very high compared to the business value the machine provides through the functionality it hosts. When deploying software, there is also a risk that the application is installed on a machine that is missing a key dependency, causing “stable production software” to fail.

Platform-as-a-Service (PaaS)

PaaS is an approach that Microsoft bet on early with Azure, although it was unfortunately slow to gain adoption. In PaaS, the platform itself (such as a web server or application service) is provided as a service, and the provider deals with the nuances of the underlying operating system host. The consumer of PaaS can therefore focus on the application itself rather than on maintaining the environment. Dependencies become less of a concern because the platform is already managed, and any additional dependencies the software needs are shipped as part of the deployment process. PaaS typically costs much less to operate than IaaS because fees are based on usage of the application itself, not on the underlying operating system or storage. In other words, you don’t pay for an idle virtual machine, only for an active application that is being used.

Perhaps one reason PaaS did not see rapid adoption despite the lower cost is that most PaaS offerings are tightly coupled to the host platform. For example, using PaaS on Azure meant building .NET websites with technologies like ASP.NET and ASP.NET MVC. Traditionally, alternative stacks like Node.js were not compatible with the service. Customers wary of “platform lock-in,” or with incompatible codebases, were therefore unlikely to participate.

Software-as-a-Service (SaaS)

Software-as-a-Service is a model that focuses on the consumer rather than the producer. IaaS and PaaS are about how an organization deploys and publishes assets to its environment; SaaS is about how services are consumed. Flowroute® is a perfect example of the SaaS model. As a consumer, you do not have to worry about how the Flowroute APIs are hosted, what language they were written in, or how they are made available over the Internet. Instead, you are provided with a well-defined set of services, exposed as API endpoints, that you can use directly to manage your telephony.

Figure 1 illustrates several of these services in terms of what you “own” and maintain. You may notice two unique stacks: the “microservice” (a further refined version of SaaS), and the container. What is a container?

Figure 1: Service Stacks

Containers

A container can be considered a “standard unit of deployment.” It packages the code you write together with the runtime for that code, related tools and libraries, and a filesystem. In other words, a container encapsulates everything needed to run a piece of software. What makes containers so powerful is that a container runs the same way on any compatible host, regardless of where that host lives. This gives containers the flexibility of PaaS without an affinity to a particular provider.

The benefits of containers include:

Consistency – everything is encapsulated in a single unit, so you don’t run into issues with missing dependencies when you deploy code

Size – container images are typically measured in megabytes rather than the gigabytes of storage needed for typical virtual machine images, so they can be stored and managed in a repository and shipped across the network

Cross-platform – containers can run on all of the major platforms and across different cloud providers (the same container will run equally well on Azure and on the Amazon EC2 Container Service)

Resiliency – because containers share the kernel of a host that is already running, they start quickly, which makes them ideal for recovery (for example, a new container can be spun up as soon as an existing container crashes)

Elasticity – containers can run as clusters and quickly “fan out” to accommodate increased requests and then “scale down” during idle periods – this can result in significant cost savings
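The elasticity benefit boils down to simple arithmetic: run enough containers to cover the current load, within a floor and a ceiling. The JavaScript sketch below (Node.js, the language of the sample project later in this post) is a hypothetical scaling rule, not how Docker or any particular orchestrator decides. The function name desiredContainers, the per-container capacity, and the min/max limits are all assumptions for illustration.

```javascript
// Illustrative scaling rule (hypothetical): not the algorithm used by Docker or by any
// particular orchestrator. It only shows the "fan out / scale down" arithmetic.
function desiredContainers(requestsPerSecond, capacityPerContainer, min = 1, max = 20) {
  if (capacityPerContainer <= 0) {
    throw new RangeError("capacityPerContainer must be positive");
  }
  // Enough containers to cover the load, rounding up so no request is left unserved.
  const needed = Math.ceil(requestsPerSecond / capacityPerContainer);
  // Keep at least `min` running for availability and never exceed `max` to cap cost.
  return Math.min(max, Math.max(min, needed));
}

// Hypothetical load figures, assuming each container handles 50 requests per second.
console.log(desiredContainers(430, 50)); // 9 (busy period: fan out)
console.log(desiredContainers(12, 50));  // 1 (idle period: scale down)
```

The floor keeps the service available during idle periods, and the ceiling is where the cost savings described above are protected from runaway scaling.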

The leading container platform is Docker, and current container standards are based on Docker images. You can download Docker for your platform at https://www.docker.com/. Docker deals with two primary concepts: an image, which defines the components needed for a container, and a container, which is a running instance of an image. Each container is based on a single source image, and you can run many containers from the same image. For developers, think of an image as a class definition and a container as a runtime instance of that class.
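To make the class analogy concrete, here is a small JavaScript sketch. It is only an analogy: Docker images are not JavaScript classes, and HelloWorldImage is a hypothetical name. The class stands in for the image, and each object created with new stands in for a container with its own ID and state.

```javascript
// Analogy only: the class plays the role of the image (a fixed definition) and each
// object created with `new` plays the role of a container (a running instance).
let nextContainerId = 0;

class HelloWorldImage {
  constructor() {
    this.id = `container-${++nextContainerId}`; // each container gets its own ID
    this.state = "running";
  }

  stop() {
    // Stopping one instance changes neither the definition nor the other instances.
    this.state = "exited";
  }
}

const first = new HelloWorldImage();
const second = new HelloWorldImage();
first.stop();

console.log(first.id, first.state);   // container-1 exited
console.log(second.id, second.state); // container-2 running
console.log(first instanceof HelloWorldImage && second instanceof HelloWorldImage); // true
```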

Consuming Containers

Consuming containers is very straightforward. Flowroute provides several containers, including:

Phone Number Masking SMS Proxy: http://developer.flowroute.com/quickstarts/phone-number-masking/

Two-factor Authentication: http://developer.flowroute.com/quickstarts/two-factor-authentication/

Appointment Reminder: http://developer.flowroute.com/quickstarts/appointment-reminder/

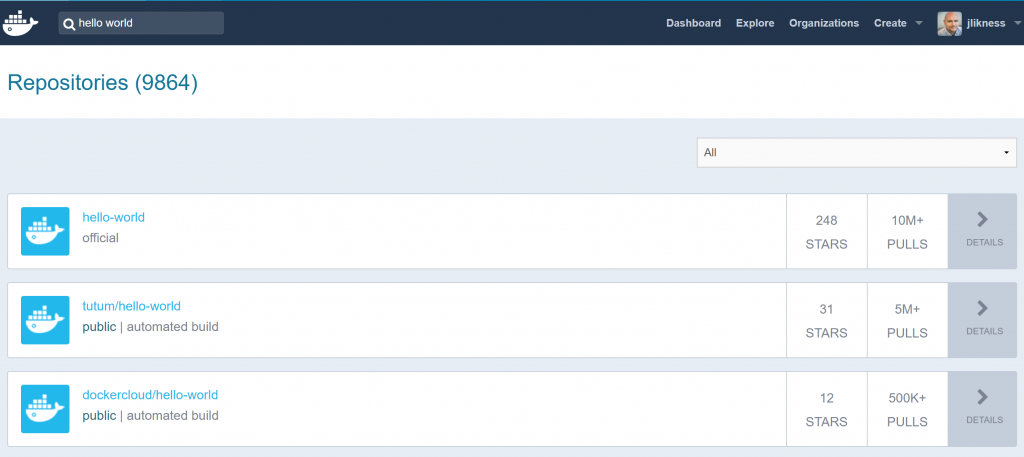

To get started, however, you can begin with a simple “Hello, World” example. Docker hosts a public registry of images, including official images, at https://hub.docker.com/. There you can search for images that do everything from printing output to running complex web servers and hosting language services. Type “hello world” in the search box on Docker Hub and you should get several results, as shown in Figure 2.

Figure 2: Docker Hub

The first image has over ten million pulls, which means it was downloaded to a local Docker instance over ten million times. Clicking the name of the image opens a page with instructions on how to use it. The easiest way is simply to type this at a command line:

docker run hello-world

This command looks for a local copy of the image. If it doesn’t find one, it pulls the image from the repository and then creates and runs a container from it. You could also have pre-loaded the image with this command:

docker pull hello-world
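The lookup that docker run performs can be sketched as a simplified, hypothetical model in JavaScript. The dockerRun function and the two Map objects standing in for the local image store and Docker Hub are illustrative assumptions; the real CLI also handles tags, digests, and authentication.

```javascript
// Simplified, hypothetical model of the image lookup that `docker run` performs.
// `localImages` stands in for the local image store and `registry` for Docker Hub.
function dockerRun(imageName, localImages, registry) {
  let pulled = false;
  if (!localImages.has(imageName)) {
    // No local copy: fetch it from the registry (what `docker pull` does ahead of time).
    if (!registry.has(imageName)) {
      throw new Error(`${imageName} not found locally or in the registry`);
    }
    localImages.set(imageName, registry.get(imageName));
    pulled = true;
  }
  // Create a new container from the local image and "run" it.
  const image = localImages.get(imageName);
  return { image: imageName, pulled, output: image.output };
}

const registry = new Map([["hello-world", { output: "Hello from Docker!" }]]);
const localImages = new Map();

console.log(dockerRun("hello-world", localImages, registry).pulled); // true: first run pulls the image
console.log(dockerRun("hello-world", localImages, registry).pulled); // false: the local copy is reused
```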

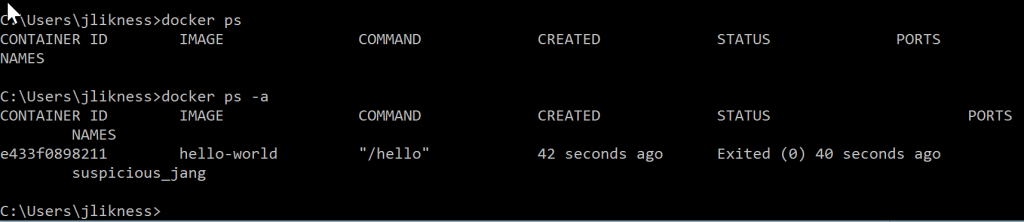

If everything goes well, you should see a message that begins with, “Hello from Docker!” In this case, the container ran and immediately exited. If you list running containers, you shouldn’t see any:

docker ps

However, if you add the -a switch for “all, including stopped containers,” you’ll see a container id for the hello-world image. Figure 3 shows what it looks like for me:

Figure 3: Docker PS

There are two ways to “clean up” stopped containers. The first and most specific way is to use the remove command with the container ID (substitute the ID shown on your own machine), like this:

docker rm e433f0898211

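A more comprehensive way to clean up is the prune command, added in Docker 1.13. After asking for confirmation, it removes all stopped containers as well as unused networks and dangling images: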

docker system prune
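If you want something narrower than system prune, a small Node.js script can remove only the stopped containers created from one image. This is a sketch that assumes the docker CLI is on your PATH. The function names are hypothetical, but the arguments it passes (ps -a -q with the ancestor and status filters, then rm) are standard docker options. The run parameter lets you substitute a stub to try it without a Docker host.

```javascript
const { execFileSync } = require("child_process");

// Default runner: calls the real docker CLI and returns its standard output as text.
function runDocker(args) {
  return execFileSync("docker", args, { encoding: "utf8" });
}

// Remove only the stopped containers created from one image (a narrower alternative
// to `docker system prune`). Pass a stub as `run` to try it without a Docker host.
function removeStoppedContainers(image, run = runDocker) {
  // -a includes stopped containers, -q prints only IDs, and the filters keep just
  // the exited containers whose image (ancestor) matches.
  const output = run(["ps", "-a", "-q", "--filter", `ancestor=${image}`, "--filter", "status=exited"]);
  const ids = output.split("\n").map((line) => line.trim()).filter(Boolean);
  if (ids.length > 0) {
    run(["rm", ...ids]); // equivalent to: docker rm <id> <id> ...
  }
  return ids;
}
```

For example, removeStoppedContainers("hello-world") would clean up the container left by the previous step and return the IDs it removed.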

To remove an image, you simply use the “remove image” command with the image name, like this:

docker rmi hello-world

Now that you are familiar with pulling and running Docker images, you are ready to learn how to build one of your own.

Build Your First Container

Let’s take the command line tool described in the blog post “Command Line Telephony with Flowroute” (https://blog.flowroute.com/2017/01/04/command-line-telephony-with-flowroute/) and containerize it. If you haven’t already built the example, get it up and running with these steps:

1. From your root folder, grab the Flowroute numbers SDK:

git clone https://github.com/flowroute/flowroute-numbers-node.js.git

2. Make a directory for your new project:

mkdir flowroute-cli

3. Copy the library for the SDK into your project:

cp -r flowroute-numbers-node.js/flowroutenumberslib/lib flowroute-cli/lib

4. Change into the directory you created (flowroute-cli must be your working directory for the remaining steps) and create two files, package.json and fr.js:

cd flowroute-cli
touch package.json fr.js

5. Populate package.json with the contents of this gist: https://gist.github.com/JeremyLikness/4167d97dda7bceb20c7cb017027075f3

6. Populate fr.js with the contents of this gist: https://gist.github.com/JeremyLikness/0b384d63dcb5d404dc3866e30ccdd2b7

7. Install dependencies:

npm i

Finally, test that the program works by running this command (retrieve your access key and secret key from the Flowroute developer site here: https://manage.flowroute.com/accounts/preferences/api/):

node fr.js -u <access_key> -p <secret_key> listNPAs

If you did everything correctly, you should see a list of area codes. To create the image, Docker uses a special file named Dockerfile. You can read more about this file at https://docs.docker.com/engine/reference/builder/. The file contains a list of instructions that explain how to build the image. Most images are layered, or built on top of other images: each instruction in the file produces a new intermediate image based on the previous one, until all instructions have been run. The final image is the one you work with.
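The layering idea can be illustrated with a short JavaScript sketch in which each instruction produces a new image whose parent is the previous one. The buildLayers function, the hashing scheme, and the sample instructions are all hypothetical. Docker computes real image and layer IDs differently, so only the parent-child chain should be taken from this example.

```javascript
const crypto = require("crypto");

// Illustration only: each Dockerfile instruction yields a new layer built on the
// previous image. The IDs here are made up (a hash of parent ID + instruction);
// Docker computes real image and layer IDs differently.
function buildLayers(baseImage, instructions) {
  const layers = [];
  let parent = baseImage;
  for (const instruction of instructions) {
    const id = crypto
      .createHash("sha256")
      .update(`${parent}\n${instruction}`)
      .digest("hex")
      .slice(0, 12);
    layers.push({ instruction, parent, id });
    parent = id; // the next instruction builds on top of this intermediate image
  }
  return layers; // the last entry is the final image you work with
}

// Hypothetical instructions, loosely shaped like a Node.js build.
const layers = buildLayers("node:6", [
  "COPY package.json /usr/src/app/",
  "RUN npm install",
  "COPY . /usr/src/app",
]);
layers.forEach((layer) => console.log(layer.id, "<-", layer.parent, ":", layer.instruction));
```

Because every layer depends on its parent, changing an early instruction produces a different chain from that point on, while the base image stays untouched.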

For this image, a special Node.js image can be used as the starting point to build a target image from the project code automatically. Create a file named Dockerfile (no extension) in the flowroute-cli directory and populate it with these instructions:

FROM node:6-onbuild
ENTRYPOINT ["node", "fr.js"]

That’s it! The FROM instruction starts from a Node.js 6.x image with the special “onbuild” tag, which copies the project into the image, installs its dependencies inside the image, and packages it up. The ENTRYPOINT instruction tells Docker that when the container runs, it should call node to launch the command line interface program and pass along any arguments given to docker run. Save the file, then build the image like this:

docker build -t flowroute-cli .

The build command instructs Docker to read the Dockerfile and create an image. The -t switch “tags” the image with the name flowroute-cli. The final argument (.) is the build context, the directory for Docker to work from, which here is the current working directory.

After the image builds you can run a Flowroute command in the container. To convince yourself it’s not simply running the local program, change to a different directory. Now run the following:

docker run -i flowroute-cli -u <access_key> -p <secret_key> listNPAs
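Because the Dockerfile uses the exec form of ENTRYPOINT, Docker appends whatever follows the image name in docker run to the entrypoint array. The JavaScript sketch below models that combination; effectiveCommand is a hypothetical helper, and it deliberately leaves out CMD, which this Dockerfile does not set.

```javascript
// Simplified model of how Docker assembles the process to start: the arguments after
// the image name in `docker run` are appended to an exec-form ENTRYPOINT. CMD is
// ignored here because this Dockerfile defines none (Docker's documentation notes that
// setting ENTRYPOINT resets a CMD inherited from the base image).
function effectiveCommand(entrypoint, runArgs) {
  return [...entrypoint, ...runArgs];
}

const entrypoint = ["node", "fr.js"]; // from ENTRYPOINT ["node", "fr.js"]
const runArgs = ["-u", "<access_key>", "-p", "<secret_key>", "listNPAs"]; // after "flowroute-cli"

console.log(effectiveCommand(entrypoint, runArgs).join(" "));
// node fr.js -u <access_key> -p <secret_key> listNPAs
```

Inside the container, this is the same command you ran locally earlier, which is why the containerized CLI accepts exactly the same arguments.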

You should see a list of area codes. You now have a fully encapsulated version of the command line interface that you can run on any environment with a Docker host! No Node.js installation is required because all of the dependencies are contained in the image. You can see the image by running:

docker images

You should see an image around 660 megabytes in size. You will also see other images, such as a “node” image, that were used as base layers to build your custom image. If you run the Docker “ps” command with the “-a” switch, you’ll see some stopped containers left over from executing your commands. You can either remove them individually or use the “system prune” command to clean up.
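One way to avoid accumulating stopped containers is the --rm flag of docker run, which removes the container as soon as it exits. The following Node.js sketch wraps the earlier command with that flag. cliRunArgs and runFlowrouteCli are hypothetical helpers (not part of the Flowroute sample), and the script assumes the docker CLI is installed and the flowroute-cli image has been built.

```javascript
const { spawnSync } = require("child_process");

// Hypothetical helper, not part of the Flowroute sample: builds the `docker run`
// arguments for the flowroute-cli image. --rm tells Docker to delete the container
// as soon as it exits, so stopped containers do not pile up between runs.
function cliRunArgs(accessKey, secretKey, command, image = "flowroute-cli") {
  return ["run", "--rm", "-i", image, "-u", accessKey, "-p", secretKey, command];
}

// Runs one CLI command in a throwaway container and returns its output.
// Requires a Docker host with the flowroute-cli image already built.
function runFlowrouteCli(accessKey, secretKey, command) {
  const result = spawnSync("docker", cliRunArgs(accessKey, secretKey, command), { encoding: "utf8" });
  if (result.error) throw result.error; // for example, docker is not installed
  if (result.status !== 0) throw new Error(result.stderr);
  return result.stdout;
}
```

For example, runFlowrouteCli(process.env.FR_ACCESS_KEY, process.env.FR_SECRET_KEY, "listNPAs") would return the list of area codes. The environment variable names are hypothetical, and reading keys from the environment simply keeps them out of the script itself.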

Summary

There is much more you can do with containers. For example, you can publish images to a repository and pull them down to share with other developers. Docker Compose allows you to orchestrate multiple containers, and Docker Swarm enables you to manage clusters of containers. In recent years, container adoption for development workflows has grown rapidly. More recently, as part of DevOps, organizations are running containers in production using orchestrators and services like Kubernetes, Azure Container Service, and Amazon EC2 Container Service. Mature organizations no longer ship “production source code” but instead move images through staging and QA into production. You now have a practical, hands-on jumpstart to leveraging containers yourself!